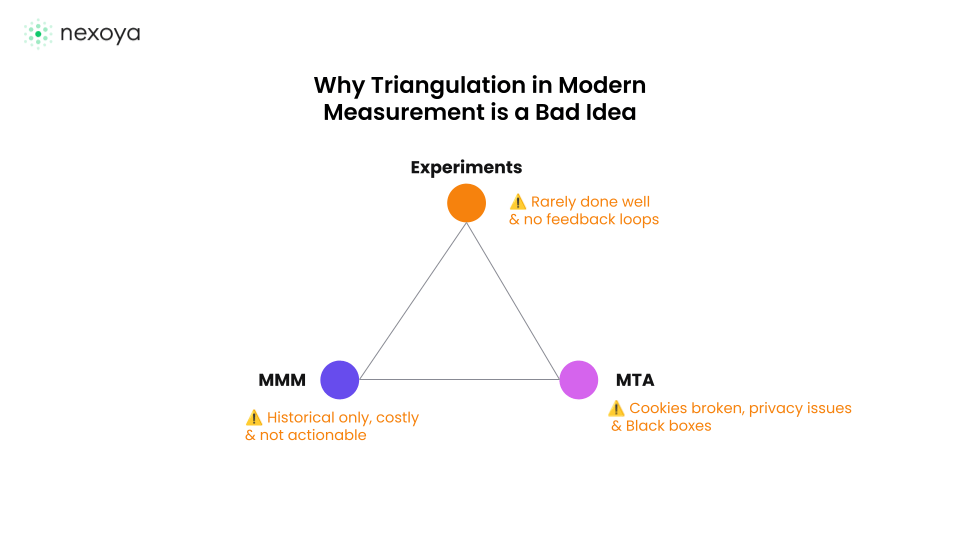

Marketers are under increasing pressure to prove ROI, navigate privacy regulations (e.g. GDPR, CCPA), and justify ad spend. Measurement triangulation, which combines Marketing Mix Modelling (MMM), Multi-Touch Attribution (MTA), and experiments/incrementality testing, sounds like a great idea. Google’s Modern Measurement Playbook even frames “using all three” as part of modern measurement.

However, layering all these approaches doesn’t guarantee clarity; it often creates confusion, misallocation, and significant effort for the analytics team, with nobody ultimately benefiting. We have tried to help various brands set up triangulation over the past 16 months, but to be clear: it isn’t that simple, and most likely you end up making assumptions without solid fundamentals underneath. Here are five reasons why over-relying on triangulation can backfire, and what to do instead.

1. Your MTA Is Broken (and You Know It, Right? 😉)

Multi-touch attribution (MTA) plays an important role in triangulation theory: it is one of the three pillars, and the one that adds the most granularity to the mix. But it has limitations. Today’s consent-managed, cookie-free environments, plus technological changes (e.g. iOS27 rumours on UTM/gclid/mclid removals), make it difficult:

- Cross-device journeys and view-through conversions are missing or undercounted

- Attribution results differ widely depending on the MTA model used (e.g. last click and other simplistic models with a lot of bias)

- Data delays and sampling issues

- MTA covers only your online channels

If your MTA has a blind spot, it drags down the whole triangulation or, put simply, makes it impossible. And with no-consent rates sometimes above 50%, it’s clear that there is not enough data to use MTA as a reasonable basis.

2. Experiments Are Rarely Done Well

Triangulation theory emphasises incrementality testing as a key to modern measurement. It’s the trendy kid in the measurement area. The idea is that experiments help to improve the priors in the Marketing Mix Model (MMM) and add more variation to the overall data, which in turn gives better statistical evidence.

Challenges we often see in practice, even at large brands:

- Siloed experiments: You run an uplift study in Meta or an A/B test in Google, and that’s great. But on its own it’s often not enough to meaningfully improve the priors for the MMM.

- Underpowered experiments: Often, sample sizes are too small or durations too short. Seasonal or external changes are not controlled for. No power analysis is done beforehand (how much data do we need to detect a significant effect?).

- Closing the loop: Very often, the results are not fed back into the MMM or attribution models for calibration. Without this feedback, the “truth” stays siloed.

- Repeat-Repeat-Repeat: Almost no brand we see has a rigorous experimentation plan/roadmap that it actually executes. But, to be frank, you need one. It’s essential to run experiments often.
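To make the power question concrete, here is a minimal sketch of a pre-test sample-size check for a conversion-rate experiment. It uses the standard normal approximation for comparing two proportions. The function name `required_sample_size`, the 2% baseline rate, and the 10% relative uplift are illustrative assumptions, not figures from a real campaign.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(baseline_rate, relative_uplift, alpha=0.05, power=0.8):
    """Approximate users needed PER GROUP for a two-sided two-proportion test.

    baseline_rate:   conversion rate of the control group (e.g. 0.02)
    relative_uplift: smallest relative uplift worth detecting (e.g. 0.10 = +10%)
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_uplift <= 0:
        raise ValueError("relative_uplift must be positive")

    p_control = baseline_rate
    p_test = baseline_rate * (1 + relative_uplift)
    if p_test >= 1:
        raise ValueError("uplift pushes the test rate to 1 or above")

    # Critical values for the significance level and the desired power
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)

    # Sum of the Bernoulli variances of both groups
    variance = p_control * (1 - p_control) + p_test * (1 - p_test)
    n = (z_alpha + z_power) ** 2 * variance / (p_test - p_control) ** 2
    return ceil(n)


if __name__ == "__main__":
    # Hypothetical inputs: 2% baseline conversion rate, +10% relative uplift
    print(required_sample_size(0.02, 0.10))
```

If the required number of users per group is more than the channel delivers in the planned test window, the experiment is underpowered before it starts.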

3. MMM Alone Shouldn’t Be the Basis for Budget Shifts

Marketing Mix Models (MMM) are a powerful strategic tool for long-term insights based on historical facts. But they have limits when it comes to triangulation:

- They rely solely on historical data, which often does not reflect the current or near-future situation (e.g. events, changes in publishers)

- Data aggregated at channel level misses finer levers that matter (e.g. campaign tactic, SEA brand vs. SEA non-brand)

- They are slow: the lag in collecting, cleaning, and modelling data across digital, offline, and other sources means they can’t be used for quick pivots.

Using MMM alone to reallocate the budget based on what happened in the past is dangerous.

4. Conflicting Signals Lead to Decision Paralysis

What the modern measurement framework calls a “layered approach” is the combination of all signals, where the weaknesses of one method are offset by the strengths of another. But to be very blunt, this layering isn’t nearly as harmonious as it sounds 😉. Some examples:

- MMM might show TV is underrated, but experiments show the opposite. Do you know whether to adjust the priors in the MMM for the future or to set up a new experiment?

- Team competition heats up: MMM says A wins, MTA says B wins, the experiment says C wins. These methods are often owned by different teams, which leads to friction. Very clear governance needs to be in place, and the teams need deep expertise.
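One way to make such a conflict actionable is to treat the MMM estimate as a prior and the experiment as new evidence, then weight each by its precision. The sketch below is a textbook normal-normal update, not a description of how any specific MMM tool handles priors; the function name `update_prior` and the ROI figures are hypothetical.

```python
def update_prior(prior_mean, prior_sd, experiment_mean, experiment_sd):
    """Combine an MMM-based prior with an experiment result.

    Both inputs are treated as normal distributions (an assumption).
    Returns the posterior mean and standard deviation, where each
    source is weighted by its precision (1 / variance).
    """
    if prior_sd <= 0 or experiment_sd <= 0:
        raise ValueError("standard deviations must be positive")

    prior_precision = 1 / prior_sd ** 2
    experiment_precision = 1 / experiment_sd ** 2

    posterior_variance = 1 / (prior_precision + experiment_precision)
    posterior_mean = posterior_variance * (
        prior_precision * prior_mean + experiment_precision * experiment_mean
    )
    return posterior_mean, posterior_variance ** 0.5


if __name__ == "__main__":
    # Hypothetical numbers: the MMM suggests a TV ROI of 2.0 (uncertain),
    # a geo experiment suggests 1.0 (more precise).
    mean, sd = update_prior(prior_mean=2.0, prior_sd=0.8,
                            experiment_mean=1.0, experiment_sd=0.4)
    print(f"updated ROI estimate: {mean:.2f} +/- {sd:.2f}")
```

The more precise source pulls the combined estimate towards itself, which gives the teams an explicit, agreed rule instead of a debate about whose number wins.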

5. Calibration & Execution Are Not Done

Mostly because of the points above, what we see in practice is that brands neither calibrate nor actually change their budgets. Most never calibrate across models, so they never see the combined results. Without calibration, triangulation collapses into three disconnected truths. Inaction has a real cost, so the problem is urgent. But how do you solve it?

In addition:

- Building and maintaining all three measurement systems (experiments, MMM, attribution) consumes engineering/analytics bandwidth, time, and tool investment.

- The complexity can obscure transparency: which model assumptions, what latency, what biases? Stakeholders may distrust or misunderstand the results.

- Danger of false confidence: “We use all three, so we’re safe.” But if one input is very bad (e.g. your MTA, as you know 🙃), it can pull the combined output off course.

- Manually changing budgets is hard, especially if you also have to change bidding targets.

Conclusion (or TL;DR if you like this better)

Triangulation isn’t a bad thing, but it’s incredibly hard to do right, and most organisations are doing a bad job of it. Without well-designed experiments, clean data, clear decision rules, and strong governance, layering MMM, MTA, and experiments multiplies confusion more often than it resolves it. That is a big risk for how your marketing department interprets its results.

What to Do Instead: A Better Path Forward

We’ve invested a lot in this topic over the past 16 months, for instance in helping customers make their MMM actionable. But it’s clear to me that triangulation is not a straightforward way to make this work.

That’s why we started exploring a novel way of doing attribution. Instead of stitching together MMM, MTA, and experiments, we reframe every budget shift as a mini-experiment and feed it into regression-based attribution. The results, as seen with Generali (+18.8% leads), are impressive.

In my next post, I’ll share how regression-based attribution with built-in weekly budget experiments works in practice and why it might be the first real alternative to triangulation.